Apple has unveiled a significant initiative to bolster the security of its AI infrastructure by launching an expanded bug bounty program. The company is offering rewards of up to $1 million to security researchers who can identify vulnerabilities within its Private Cloud Compute (PCC) servers, which underpin the Apple Intelligence features set to debut with iOS 18.1.

Strengthening AI Privacy with Private Cloud Compute

Apple Intelligence, the company’s suite of AI-powered features, runs primarily on-device to protect user privacy. For more complex tasks that need substantial computational resources, however, data is processed through PCC, Apple’s custom-built cloud infrastructure designed with privacy at its core. These servers use Apple Silicon and a hardened operating system to maintain stringent security standards, so that user data is ephemeral and encrypted during processing.

Comprehensive Bug Bounty Program

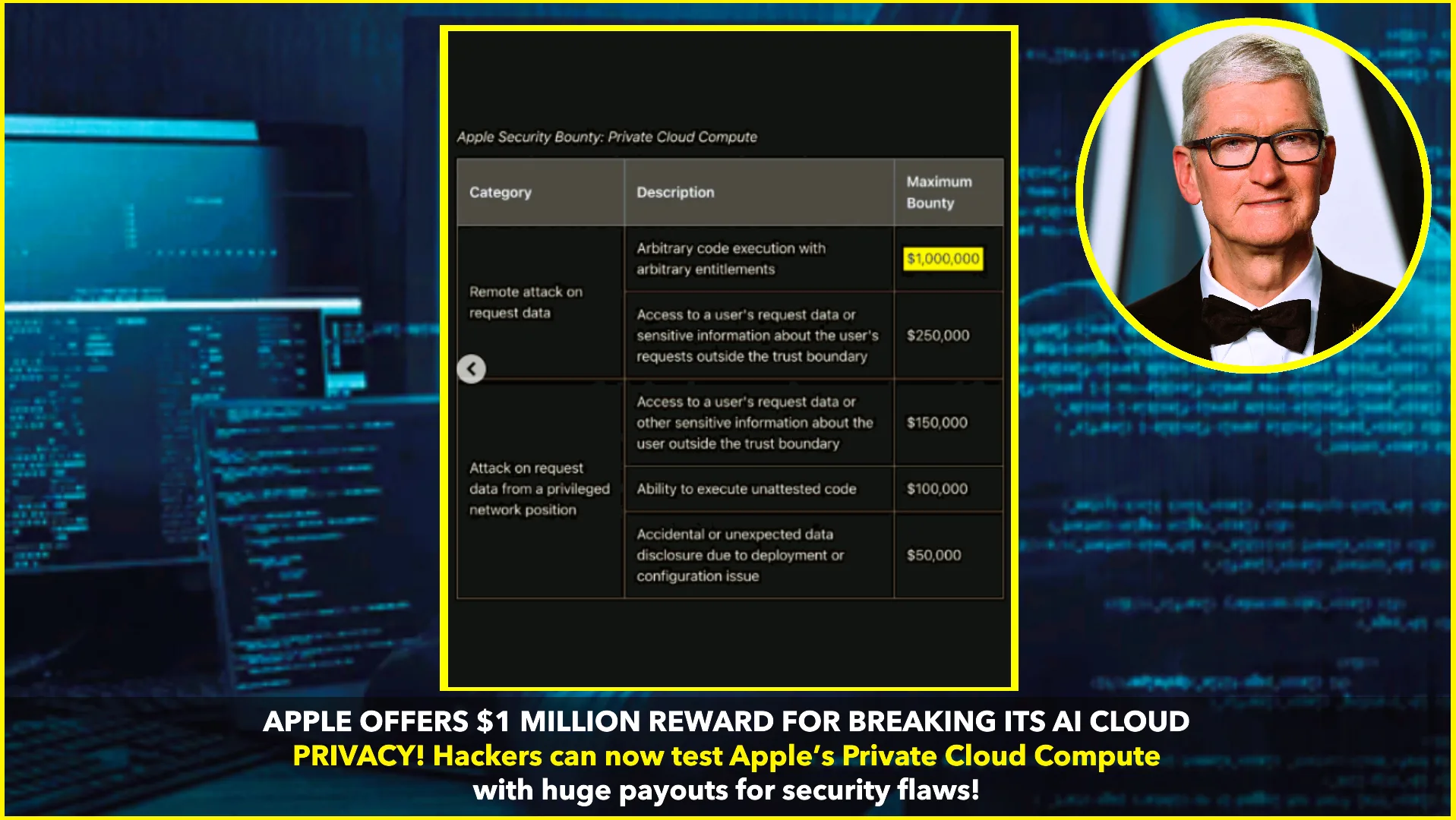

To validate the security of PCC, Apple has expanded its Security Bounty program, inviting researchers to probe the system for potential vulnerabilities. The reward structure is tiered based on the severity and nature of the discovered issues:

- $50,000: For accidental data disclosures due to configuration flaws or system design issues.

- $100,000: For the ability to execute code that has not been certified.

- $150,000 to $250,000: For unauthorized access to user request data or sensitive information outside the defined trust boundary.

- $1,000,000: For achieving arbitrary code execution with arbitrary entitlements, representing the most critical level of vulnerability.

Apple emphasizes that it will also consider rewards for significant security issues that may not fall within these predefined categories, evaluating each report based on its quality, exploitability, and potential impact on users.
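
For readers who want to work with the tiers programmatically, the reward structure listed above can be written as a small lookup table. This is only an illustrative sketch: the dollar amounts come from this article, while the short keys such as `uncertified_code` and the `BountyTier` type are hypothetical names invented for the example, not identifiers used by Apple.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BountyTier:
    description: str
    min_usd: int
    max_usd: int


# Amounts as reported in this article; the dictionary keys are hypothetical labels.
TIERS = {
    "accidental_disclosure": BountyTier(
        "Accidental data disclosure due to configuration flaws or system design issues",
        50_000, 50_000),
    "uncertified_code": BountyTier(
        "Ability to execute code that has not been certified",
        100_000, 100_000),
    "request_data_access": BountyTier(
        "Unauthorized access to user request data or sensitive information "
        "outside the defined trust boundary",
        150_000, 250_000),
    "arbitrary_code_execution": BountyTier(
        "Arbitrary code execution with arbitrary entitlements",
        1_000_000, 1_000_000),
}


def reward_range(key: str) -> tuple[int, int]:
    """Return the (minimum, maximum) listed reward in USD for a tier key."""
    tier = TIERS[key]
    return tier.min_usd, tier.max_usd


def top_reward() -> int:
    """Return the largest listed reward across all tiers."""
    return max(tier.max_usd for tier in TIERS.values())
```

Reports that fall outside these categories are assessed case by case, so a table like this can only describe the published tiers, not every possible payout.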

Tools for Researchers: Virtual Research Environment

To facilitate thorough security assessments, Apple has introduced a Virtual Research Environment (VRE). This tool allows researchers to analyze PCC’s software in a controlled setting on their Macs. The VRE provides capabilities such as inspecting software releases, verifying transparency logs, booting releases in a virtualized environment, and modifying PCC software for deeper investigation. Additionally, Apple has made source code for key PCC components available under a limited-use license, enabling researchers to perform in-depth analyses.
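
To give a rough sense of what verifying a transparency log can involve, the sketch below checks a Merkle inclusion proof in the style used by Certificate Transparency (RFC 6962 hashing). It is an assumption that this resembles PCC's log at all: the article does not describe Apple's log format or the VRE's commands, and the function names and sample release strings here are hypothetical. The idea it illustrates is general: given a log's published root hash, a short proof shows that a specific entry is included without downloading the whole log.

```python
import hashlib


def leaf_hash(data: bytes) -> bytes:
    # RFC 6962 domain separation: leaves are prefixed with 0x00.
    return hashlib.sha256(b"\x00" + data).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    # Interior nodes are prefixed with 0x01.
    return hashlib.sha256(b"\x01" + left + right).digest()


def verify_inclusion(leaf: bytes, leaf_index: int, tree_size: int,
                     proof: list[bytes], root: bytes) -> bool:
    """Check that `leaf` sits at `leaf_index` in a tree of `tree_size` leaves."""
    if not 0 <= leaf_index < tree_size:
        return False
    fn, sn = leaf_index, tree_size - 1
    r = leaf_hash(leaf)
    for p in proof:
        if sn == 0:
            return False  # proof is longer than the path to the root
        if fn & 1 or fn == sn:
            # Current node is a right child: the sibling goes on the left.
            r = node_hash(p, r)
            # Skip levels where this node has no sibling (ragged right edge).
            while fn and not fn & 1:
                fn >>= 1
                sn >>= 1
        else:
            # Current node is a left child: the sibling goes on the right.
            r = node_hash(r, p)
        fn >>= 1
        sn >>= 1
    return sn == 0 and r == root


# Hypothetical four-entry log, built by hand for the demonstration.
entries = [b"release-a", b"release-b", b"release-c", b"release-d"]
h = [leaf_hash(e) for e in entries]
left, right = node_hash(h[0], h[1]), node_hash(h[2], h[3])
root = node_hash(left, right)

# Proof for entry 2: its sibling leaf, then the left subtree hash.
proof_for_c = [h[3], left]
included = verify_inclusion(b"release-c", 2, 4, proof_for_c, root)
```

A tampered entry or a proof against a different root makes `verify_inclusion` return `False`, which is the property that makes an append-only log useful for auditing published software releases.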

Commitment to Transparency and Security

Apple’s initiative reflects a broader commitment to transparency and user privacy in the realm of artificial intelligence. By opening PCC to external scrutiny and offering substantial incentives for vulnerability discovery, Apple aims to build trust in its AI services and ensure robust protection of user data.

Security researchers interested in participating can access the necessary resources, including the PCC Security Guide and VRE, through Apple’s official security research platform. Submissions for the bug bounty program can be made via the Apple Security Bounty page.